King Mongkut's University of Technology Thonburi (KMUTT)

I am a final-year undergraduate student at KMUTT, Thailand, majoring in Computer Science. I am also a student researcher at IC2 Research Center under the supervision of Assoc. Prof. Dr. Jonathan Hoyin Chan.

I am interested in Generative AI, with a particular focus on Diffusion Large Language Models (dLLMs) and reasoning models. I am looking for internship opportunities and research collaborations in these areas.

Education

-

King Mongkut's University of Technology Thonburi, Department of Computer Science

Undergraduate Student, Aug. 2022 - present

Honors & Awards

-

First Runner-up Award at The 16th International Cybersecurity and Generative AI Competition, 2025

-

First Runner-up Award at ASEAN Data Science Explorers National Final, 2024

-

First Prize at Future of Food Hackathon by Reactor School and Singapore Global Network, 2024

News

Selected Publications

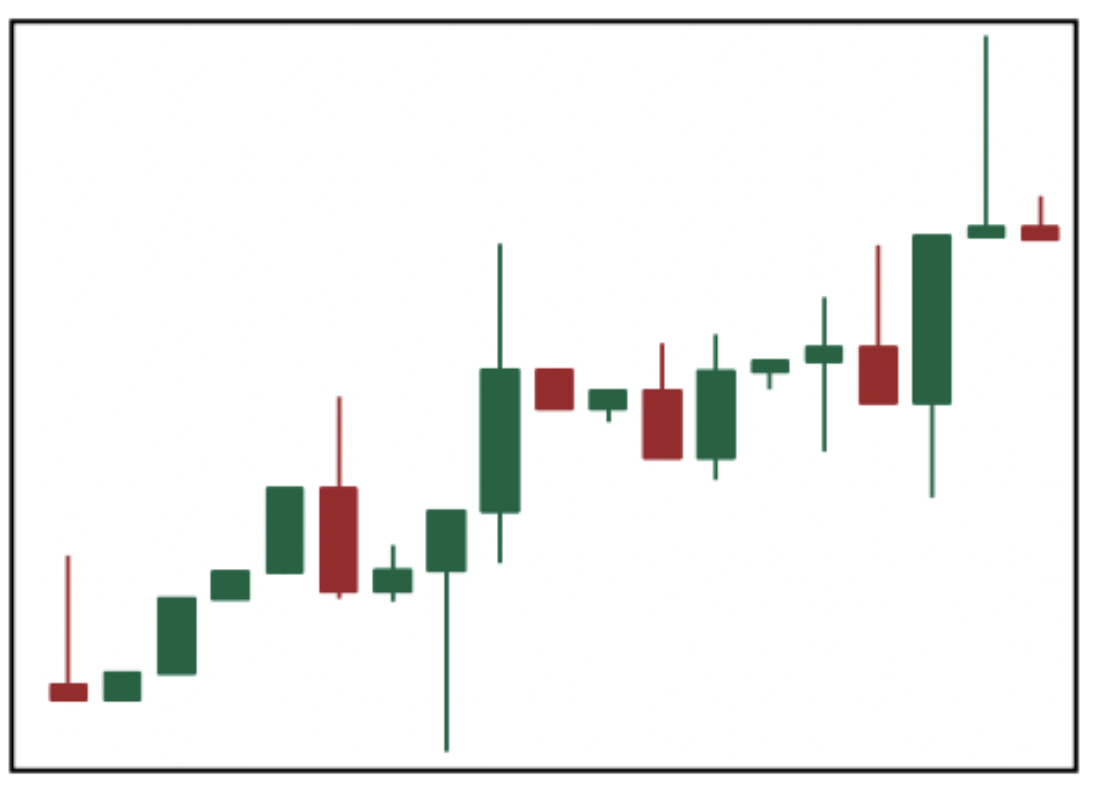

CandleGen: Generating Synthetic OHLC Data for Different Market Trends using GANs

Kaung Myat Kyaw, Jonathan Chan, Udom Silparcha

International Symposium on Information and Communication Technology (SOICT) 2025, in press

A GAN-based system for generating synthetic OHLC data tailored to various market conditions. By training separate GANs for distinct market states, we captured the unique characteristics of each condition, resulting in synthetic data that mirrors real market behavior. Our evaluations demonstrated that CandleGen preserves the statistical properties and produces realistic samples, making it a valuable tool for applications in algorithmic trading and risk management.

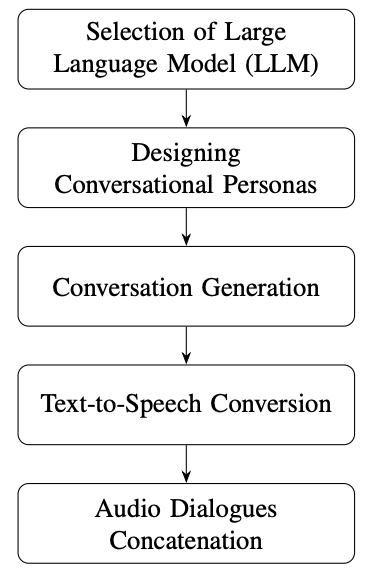

A Framework for Synthetic Audio Conversations Generation using Large Language Models

Kaung Myat Kyaw, Jonathan Chan

International Conference on Web Intelligence and Intelligent Agent Technology 2024

ConversaSynth, a framework designed to generate synthetic conversation audio using large language models (LLMs) with multiple persona settings. The framework first creates diverse and coherent text-based dialogues across various topics, which are then converted into audio using text-to-speech (TTS) systems.
